Managing files can become complicated when a system holds a large number of duplicate files. Duplicates take up storage space and can cause disk-full errors. The simplest approach is to locate and delete them by hand, but a dedicated tool makes the process much easier. In this article, we will look at some useful utilities for finding and deleting duplicate files on a Linux system.

Fslint

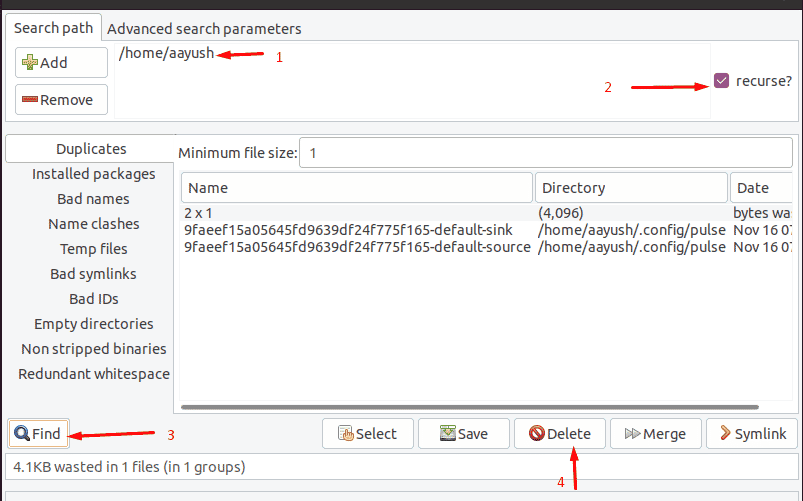

On Linux/Unix-based systems, a very useful tool called “fslint” helps find and remove duplicate files, temporary files, and empty directories to free up disk space. Fslint offers both a GUI and a CLI mode, which makes it easy to use. Installation is simple: run the command for your distribution.

Ubuntu/Debian

$ sudo apt-get install fslint

On Ubuntu 20.04 LTS, fslint is not available in the default repositories. Use the following commands to download the packages and install the tool.

$ mkdir -p ~/Downloads/fslint

$ cd ~/Downloads/fslint

$ wget http://old-releases.ubuntu.com/ubuntu/pool/universe/p/pygtk/python-gtk2_2.24.0-6_amd64.deb

$ wget http://old-releases.ubuntu.com/ubuntu/pool/universe/p/pygtk/python-glade2_2.24.0-6_amd64.deb

$ wget http://old-releases.ubuntu.com/ubuntu/pool/universe/f/fslint/fslint_2.46-1_all.deb

$ sudo apt-get install ./*.deb

RHEL/CentOS

$ sudo yum install epel-release

$ sudo yum install fslint

Once the installation is complete, look for fslint in the applications menu. Select the directory to scan and tick the recurse option to scan subdirectories recursively. When the scan finishes, click Delete to remove the duplicate files.

Rdfind

Rdfind is a free and open source utility for finding duplicate files. It compares files by content and distinguishes the original from the duplicates using a classification algorithm. When duplicates are found, it writes a report with the results.

Run the following command to install the tool:

Ubuntu/Debian

$ sudo apt-get install rdfind

RHEL/CentOS

$ sudo yum install epel-release

$ sudo yum install rdfind

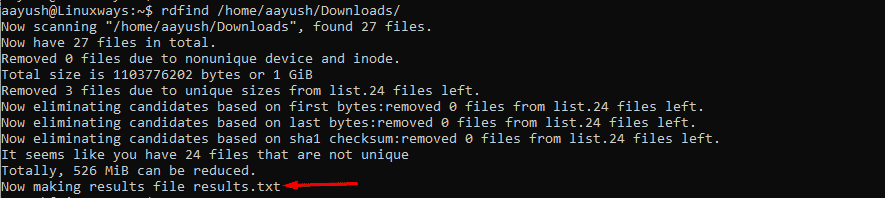

Once the tool is installed, run the rdfind command with the path of the directory to scan for duplicate files. This example uses /home/aayush/Downloads; substitute your own directory.

Syntax

$ rdfind <Path>

Example

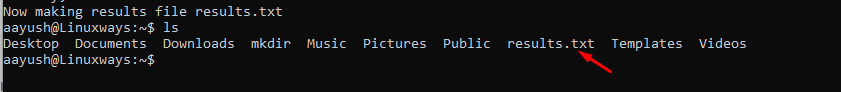

$ rdfind /home/aayush/Downloads

A report file called results.txt is generated in the current working directory. Open it for details about the duplicate files.
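To pull just the duplicate paths out of the report, a small awk filter is enough. The sketch below assumes the column layout that rdfind describes in the header comment of results.txt (duptype, id, depth, size, device, inode, priority, name) and that the first file in each set is tagged DUPTYPE_FIRST_OCCURRENCE. The helper name list_rdfind_dupes is hypothetical, so check the result against your own report before relying on it.

```shell
# list_rdfind_dupes [REPORT]: print the paths rdfind marked as duplicates.
# Hypothetical helper; REPORT defaults to results.txt in the current directory.
list_rdfind_dupes() {
    awk '
        /^#/ { next }                                # skip comment lines
        NF >= 8 && $1 != "DUPTYPE_FIRST_OCCURRENCE" {
            name = $0
            # Strip the seven leading columns; the remainder is the file name,
            # which may itself contain spaces.
            for (i = 1; i <= 7; i++) sub(/^[^ ]+ /, "", name)
            print name
        }
    ' "${1:-results.txt}"
}
```

After defining the function in the current shell, running list_rdfind_dupes results.txt prints one duplicate path per line and leaves the files themselves untouched.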

Fdupes

Fdupes is another useful utility available on Linux systems. It is free and open source and written in C. It identifies duplicate files by comparing file sizes, then partial MD5 signatures, then full MD5 signatures, and finally verifies the match with a byte-by-byte comparison.

Run the following command to install the tool:
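The staged comparison can be sketched in shell. This is only an illustration of the idea, not how fdupes is implemented: find_dupes is a hypothetical helper, it skips the partial-MD5 stage, and it assumes find, stat -c, md5sum, and cmp are available and that file names contain no newlines or backslashes.

```shell
# find_dupes DIR: print "kept<TAB>duplicate" pairs for identical files under DIR.
# Hypothetical helper for illustration; not part of fdupes.
find_dupes() (
    dir=$1

    # Stage 1: collect the sizes (in bytes) shared by more than one non-empty file.
    dup_sizes=$(find "$dir" -type f -size +0c -exec stat -c '%s' {} + | sort -n | uniq -d)

    for size in $dup_sizes; do
        # Stage 2: hash only the files of this size; sorting puts equal hashes together.
        find "$dir" -type f -size "${size}c" -exec md5sum {} + | sort |
        {
            prev_hash=""
            prev_file=""
            while IFS= read -r line; do
                hash=${line%% *}     # text before the first space
                file=${line#*  }     # text after the two-space separator
                # Stage 3: confirm a hash match byte by byte before reporting it.
                if [ "$hash" = "$prev_hash" ] && cmp -s "$prev_file" "$file"; then
                    printf '%s\t%s\n' "$prev_file" "$file"
                else
                    prev_hash=$hash
                    prev_file=$file
                fi
            done
        }
    done
)
```

Checking sizes first is what keeps this cheap: files with a unique size are never hashed, and the byte-by-byte check only runs on files whose hashes already match.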

Ubuntu/Debian

$ sudo apt-get install fdupes

RHEL/CentOS

$ sudo yum install epel-release

$ sudo yum install fdupes

Once the tool is installed, run the fdupes command with the path of the directory to scan for duplicate files.

Syntax

$ fdupes <path>

Example

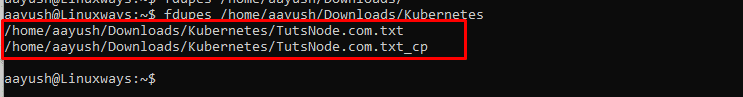

$ fdupes /home/aayush/Downloads/Kubernetes

To search for duplicate files in subdirectories as well, run fdupes with the -r option and the path.

Syntax

$ fdupes <Path> -r

Example

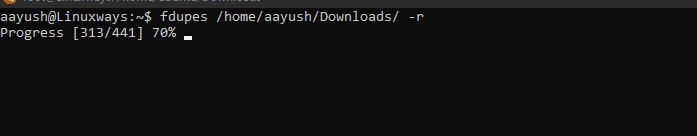

$ fdupes /home/aayush/Downloads -r

Output:

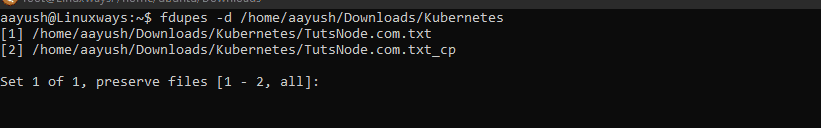

To remove duplicates, run fdupes with the -d option and the path.

Syntax

$ fdupes -d <Path>

Example

$ fdupes -d /home/aayush/Downloads/Kubernetes

With -d, fdupes prompts for which files to preserve in each set of duplicates and deletes the others.

To delete duplicates in subdirectories as well, combine the -d option with the recursive option (-r).

Syntax

$ fdupes -d <path> -r

Example

$ fdupes -d /home/aayush/Downloads -r
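Because -d removes files for good, it may be worth previewing what would go first. fdupes prints each set of duplicates as a group of paths followed by a blank line, so a small filter can skip the first path of every group and print the rest. The helper name deletion_candidates is hypothetical, and treating the first file in each group as the one to keep is an assumption made for this sketch.

```shell
# deletion_candidates: read fdupes-style output on stdin and print every path
# except the first one in each blank-line-separated group.
deletion_candidates() {
    awk '
        BEGIN { first = 1 }          # the first line of input starts a group
        /^$/  { first = 1; next }    # a blank line ends the current group
        first { first = 0; next }    # first path of a group: the one to keep
              { print }              # remaining paths: candidates for deletion
    '
}
```

With the function defined in the current shell, a preview run looks like this and deletes nothing:

$ fdupes -r /home/aayush/Downloads | deletion_candidates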

For more help with the fdupes command, run either of the following commands.

$ fdupes --help

$ man fdupes

Conclusion

Duplicate files can sometimes cause real problems on a system. In this article, I have covered different tools for finding and deleting duplicate files on a Linux system. Thank you for reading.